Research Review 2023

Moving Explainable Artificial Intelligence (XAI) from Research to Practice: Defining and Validating an XAI Process Framework

While advances in deep learning have led to exciting computer vision and natural language capabilities, they also amplify existing challenges in working with opaque models. A lack of transparency about artificial intelligence (AI) decision-making processes can make it challenging for end users to determine whether AI system suggestions are right or wrong, or to build justified trust in system outputs. Research into explainable AI (XAI) aims to address a lack of system transparency by providing humans with explanations they can use to reason about AI predictions and behaviors. Our project highlights the CMU SEI’s work moving XAI techniques from research to practice.

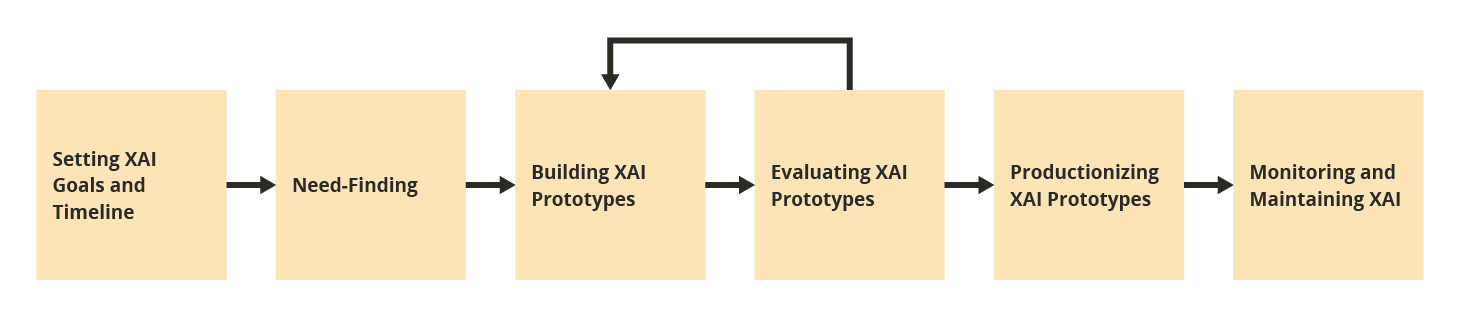

Through our two-year explainability project, our team of Carnegie Mellon University Software Engineering Institute (CMU SEI) and Human-Computer Interaction Institute (HCII) researchers is investigating practical methods for implementing XAI through a case study that adds explainability to real-world computer vision algorithms. Our work proposes a six-stage process framework that AI practitioner teams can employ to meet end user needs, prototype and evaluate potential explanations, and maintain explainability software in the long term.

Our project’s activities include completing

- a literature survey of existing process frameworks and perspectives on practical XAI implementation

- a case study building explanations for computer vision algorithms used by wildlife conservation nonprofit Wild Me

- a case study building explanations for an AI system designed for the mission space

We will capture our lessons learned through a process framework that we will share with both the XAI research community and stakeholders from the defense and intelligence communities. We are currently in the needfinding stage of our collaboration with Wild Me, during which we are identifying current challenges and opportunities related to system transparency. While this case study focuses on a conservation use case, algorithms for classifying input images or identifying individuals are also relevant to sponsors who are building systems for the mission space and plan to vet system outputs.

Our team is interested in the challenges of implementing responsible AI principles and identifying U.S. Department of Defense (DoD)-relevant solutions. For our second case study, we are currently looking for DoD collaborators whose computer vision systems for the mission space could benefit from explainability. Our framework will support those charged with making decisions about the use of AI systems in the mission space by providing them with methods for defining XAI requirements and ensuring that AI practitioners follow a standardized process for implementing XAI. We aim to address the growing need for transparency in mission systems by proposing and validating a process that can be used by AI practitioner teams to introduce explainability within their systems.

In Context: This FY2023-24 Project

- leverages CMU SEI and CMU HCII expertise in designing human-centered systems using techniques such as model calibration, interface design, and explainability methods

- aligns with the CMU SEI technical objective to be trustworthy in construction and implementation and resilient in the face of operational uncertainties

- aligns with the OUSD(R&E) critical technology priority of developing trusted AI and autonomy and addresses the DoD’s Ethical Principles in AI

Principal Investigator

Violet Turri

Associate Software Developer

SEI Collaborators

Katie Robinson

Associate Design Researcher

Dr. Rachel Dzombak

Special Advisor to the Director, AI Division

Collin Abidi

Associate Software Developer

CMU Collaborators

Dr. Jodi Forlizzi

Herbert A. Simon Professor in Computer Science and HCII, and the Associate Dean for Diversity, Equity, and Inclusion in the School of Computer Science

Dr. Adam Perer

Assistant Professor, CMU HCII

Katelyn Morrison

PhD Student, CMU HCII

Abby Chen

Undergrad Student, CMU HCII

Other Collaborators

Jason Holmberg

Executive Director

Wild Me

Have a Question?

Reach out to us at info@sei.cmu.edu.